Overview

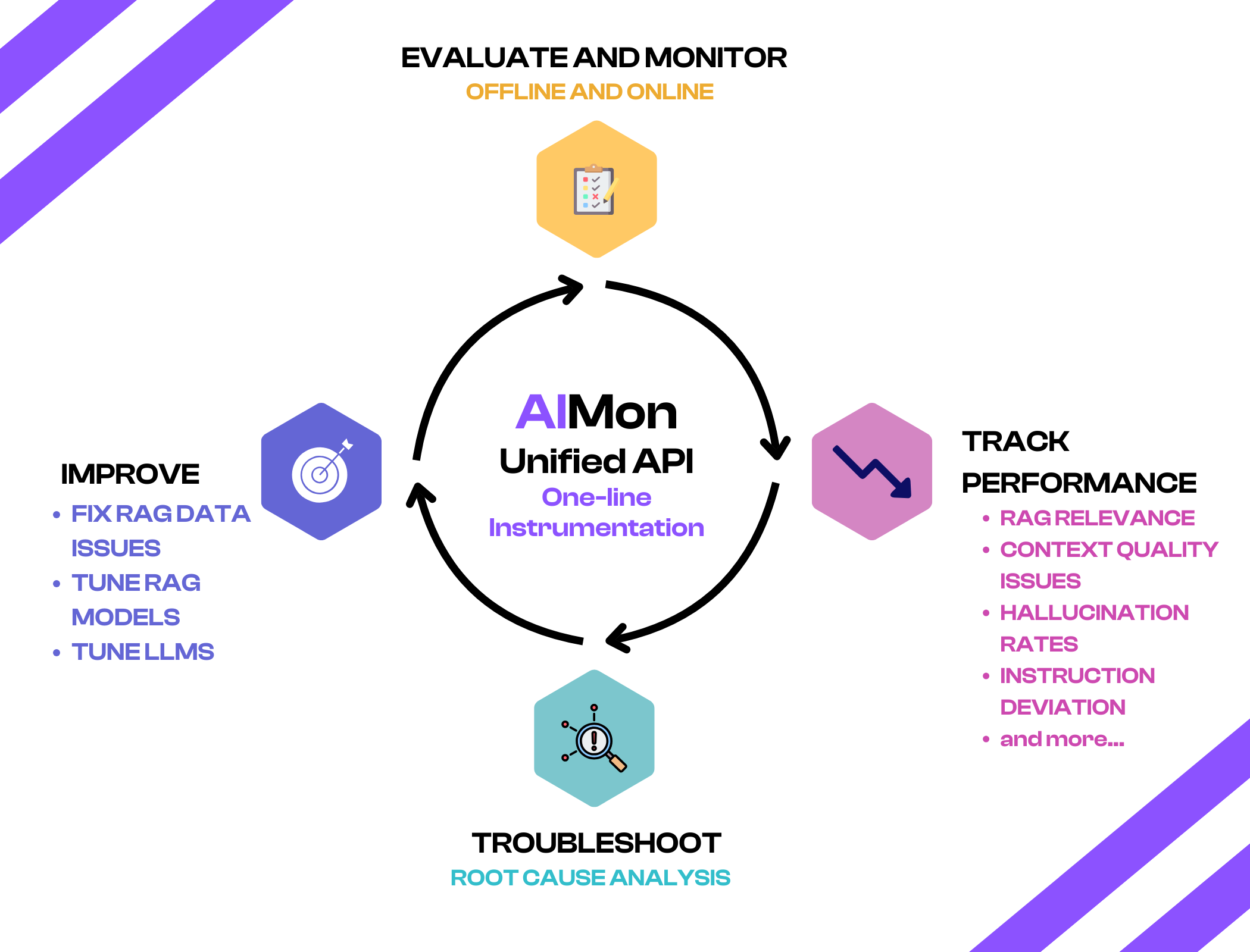

AIMon is an LLM and RAG improvement platform that helps you:

- Evaluate your LLM apps and test how different datasets, vector DBs, or LLMs impact your metrics

- Continuously monitor how your LLM apps and RAG systems work in production

- Troubleshoot why Hallucinations, Instruction deviation, and other issues happen

- Improve your apps incrementally with the feedback from AIMon

using a single Unified API.

Deployment Options

AIMon can be deployed on-premises or used as a hosted solution, and it deploys quickly in your VPC across the different cloud service providers (CSPs). AIMon is modular, so you can purchase and deploy it in any of the following modes:

- Single Detection Models including Hallucination, Instruction Adherence, and Context quality.

- Multiple Detection Models along with an API orchestrator

- Complete Platform usable with open-source SDKs, a Unified API, one-line instrumentation, and an enterprise-ready UI application

All of our models are trained on a variety of datasets and remain fully customizable for your specific needs.

Our Team

Our team comprises ML engineers, researchers, and product leaders from Netflix, AppDynamics, Stanford AI Lab, and NYU who are building ground-up innovation focused on reliable enterprise adoption of LLMs.

Who is AIMon for?

AIMon is built for pioneering LLM application builders focused on increasing the accuracy and quality of their RAG retrieval and LLM outputs. If you are an ML engineer, a researcher, or a front-end or back-end engineer working on LLM projects such as chatbots or summarization apps, you can use AIMon to incrementally improve your apps.

What AIMon is not

- AIMon does not call your LLMs to evaluate your outputs. We build our own models.

- AIMon is not an LLM provider, nor do we host LLMs for our customers.

- AIMon is not a prompt management or an LLM IDE tool.

- AIMon is not well suited for closed-book LLM apps. We work well in enterprise use cases where contextual enrichment such as RAG is used.

Our Goal

We are laser-focused on optimizing your LLM apps and helping you ship them with confidence. That is why we work closely with LLM app builders to help them instrument their apps and discover and fix quality problems specific to them. Here is a quick way to understand the common ways in which our customers use us:

Metrics

At the core of AIMon's platform are our proprietary metrics, seamlessly accessible via a single call to our Unified API.

Your LLM App and AIMon

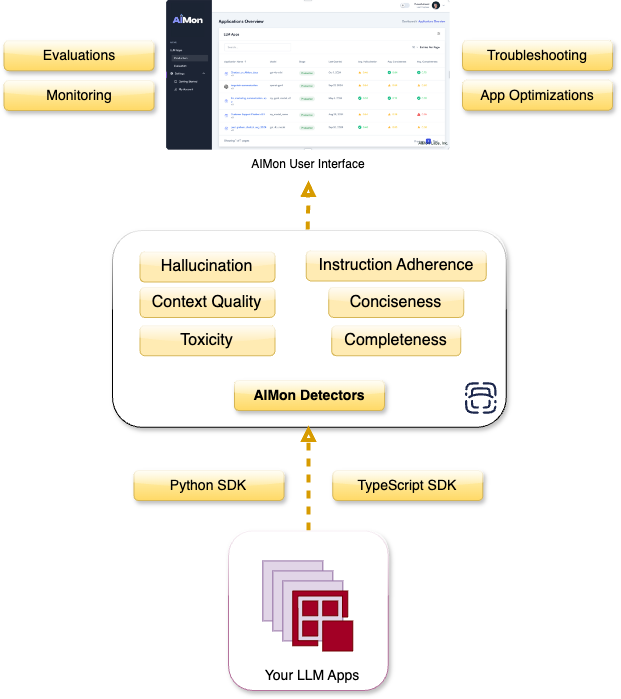

Here is a reference architecture showing how AIMon supports your LLM app development. AIMon components are shown in yellow. You can use our SDKs to instrument your app with AIMon either in real time or asynchronously. AIMon then detects issues with your LLM app and pinpoints the root causes behind its hallucinations.
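
To make the difference between the two instrumentation modes concrete, here is a minimal sketch in plain Python. It does not use the AIMon SDK or the Unified API: `stub_detector`, `respond_realtime`, and `AsyncMonitor` are hypothetical names invented for this illustration, and the word-overlap check is only a toy stand-in for a real detection model.

```python
import queue
import threading


def stub_detector(context: str, output: str) -> dict:
    """Hypothetical stand-in for a detection call; NOT AIMon's API.

    Flags output words that never appear in the supplied context.
    """
    context_words = set(context.lower().split())
    unsupported = [w for w in output.lower().split() if w not in context_words]
    return {"unsupported_words": unsupported, "flagged": bool(unsupported)}


def respond_realtime(context: str, output: str):
    """Real-time mode: detection runs inline, before the response is returned."""
    return output, stub_detector(context, output)


class AsyncMonitor:
    """Asynchronous mode: outputs are queued and checked by a worker thread."""

    def __init__(self):
        self._jobs = queue.Queue()
        self.results = []
        worker = threading.Thread(target=self._run, daemon=True)
        worker.start()

    def _run(self):
        while True:
            context, output = self._jobs.get()
            self.results.append(stub_detector(context, output))
            self._jobs.task_done()

    def submit(self, context: str, output: str) -> None:
        # Returns immediately; the caller is not blocked by detection.
        self._jobs.put((context, output))

    def wait(self) -> None:
        # Block until every queued item has been checked.
        self._jobs.join()
```

In this sketch, the real-time path adds detection latency to every response but lets the app act on the result right away, while the asynchronous path keeps the response fast and defers the check to a background worker.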


Let us now take a closer look at the AIMon components. The following diagram describes how data is sent from your app to AIMon and how AIMon can help improve your LLM app.

AIMon high-level architecture.

What's next?

- Go to the Quick Start guide to get started with AIMon.

- Read about the different concepts of the AIMon Platform using the Glossary.

- Check out example applications that use AIMon's detectors: