Hallucination

AIMon's latest hallucination detection model, HDM-2, improves significantly over HDM-1 by delivering higher accuracy, lower latency, and better handling of subtle factual inconsistencies in LLM-generated outputs. Explore HDM-2 on GitHub or Hugging Face.

What is a Hallucination?

A hallucination refers to any part of a large language model (LLM) output that is not grounded in fact. This includes content that is inaccurate, fabricated, or inconsistent with known or provided information.

Such errors pose serious risks in domains such as healthcare, finance, law, and regulatory reporting, where the factual precision and trustworthiness of model outputs are critical.

The hallucination detection model assigns a calibrated severity score between 0.0 (fully grounded) and 1.0 (highly hallucinated), with additional token- and sentence-level annotations to highlight specific problem areas.

Types of Hallucinations

Hallucinations vary in form depending on what kind of knowledge they contradict or invent. The categories below help classify these distinctions and guide interpretation of the model's output.

| Type | Description | Example |
|---|---|---|
| Context-Based | Contradicts or is unsupported by the provided context | “The trial enrolled 800 patients.” (Actual: 573) |
| Common Knowledge | Violates widely accepted facts | “Venus is the closest planet to the Sun.” |
| Fabrication | Invented, plausible-sounding content with no factual basis | “Cristiano Ronaldo is a cricket player.” |
| Enterprise Knowledge | References domain-specific facts not present in context (Enterprise only) | “Northbridge has led enrollments since 2020.” |

Performance Metrics

HDM-2 has been benchmarked extensively to validate its robustness and accuracy in detecting hallucinations across diverse tasks and domains.

Accuracy Benchmarks

The model consistently outperforms existing baselines on standard evaluation datasets as well as on HDM-Bench, AIMon's novel open-source dataset designed to evaluate context-based and common-knowledge hallucinations.

| Dataset | Precision | Recall | F1 Score |
|---|---|---|---|
| HDM-Bench | 0.87 | 0.84 | 0.855 |
| TruthfulQA | 0.82 | 0.78 | 0.80 |
| RagTruth | 0.85 | 0.81 | 0.83 |

What do Precision, Recall, and F1 score mean?

- Precision: The proportion of predicted hallucinations that were actually hallucinations (i.e., how accurate the positive predictions are).

- Recall: The proportion of actual hallucinations that were correctly identified (i.e., how many true hallucinations were caught).

- F1 Score: The harmonic mean of precision and recall; a balanced measure of overall accuracy when both false positives and false negatives matter.
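The three definitions above can be sketched in a few lines of Python. This is a minimal illustration, not AIMon's evaluation code: the function names and the confusion counts (`tp`, `fp`, `fn`) are hypothetical, chosen only to show the arithmetic.

```python
from typing import Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision and recall from counts of true positives, false positives, false negatives."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


# Hypothetical counts: 80 correctly flagged hallucinations,
# 20 false alarms, 20 missed hallucinations.
p, r = precision_recall(tp=80, fp=20, fn=20)
print(p, r, f1_score(p, r))

# The harmonic mean of the HDM-Bench precision and recall reported above.
print(round(f1_score(0.87, 0.84), 3))
```

Because the harmonic mean is pulled toward the smaller of the two values, a model cannot reach a high F1 by maximizing only precision or only recall.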

Latency Benchmarks

Designed for production use, HDM-2 delivers low-latency inference, making it suitable for real-time and agentic systems.

| Device | Avg Latency (s) | 95th Percentile (s) | Max Latency (s) |
|---|---|---|---|
| Nvidia A100 | 0.204 | 0.208 | 1.32 |
| Nvidia L4 (recommended) | 0.207 | 0.220 | 1.29 |
| Nvidia T4 | 0.935 | 1.487 | 1.605 |
| CPU | 261.92 | 350.76 | 356.96 |
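To collect the same three statistics for your own deployment, a small timing harness along the following lines may help. It is a sketch under stated assumptions: the nearest-rank percentile method is an assumption (the benchmark's exact method is not stated here), and `summarize_latencies` and `time_calls` are hypothetical helper names. The timed workload is a stand-in to be replaced with a call to your own detection client.

```python
import time
from statistics import fmean
from typing import Callable, Dict, List


def summarize_latencies(samples: List[float]) -> Dict[str, float]:
    """Return average, nearest-rank 95th percentile, and max of latency samples (seconds)."""
    if not samples:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples)
    # Nearest-rank percentile: the smallest sample with at least 95% of
    # samples at or below it. Integer arithmetic computes ceil(0.95 * n).
    rank = (95 * len(ordered) + 99) // 100
    return {"avg": fmean(ordered), "p95": ordered[rank - 1], "max": ordered[-1]}


def time_calls(fn: Callable[[], object], n_runs: int = 20) -> List[float]:
    """Call fn n_runs times and record the wall-clock seconds of each call."""
    latencies = []
    for _ in range(n_runs):
        start = time.perf_counter()
        fn()
        latencies.append(time.perf_counter() - start)
    return latencies


# Stand-in workload (assumption); swap in a real detection request.
stats = summarize_latencies(time_calls(lambda: sum(range(10_000))))
print(stats)
```

A single slow call, such as a cold start, raises the maximum without moving the 95th percentile much, which matches the pattern in the A100 and L4 rows above.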

Limitations

- Hallucination annotations related to common knowledge may include a degree of subjectivity, particularly when facts depend on interpretation or phrasing.

- Statements involving enterprise-specific knowledge (e.g., internal company facts, private data) are not flagged unless they are present in the provided context. These are only supported in the Enterprise version.

- HDM-2 currently supports English text only.

API Request & Response Example

Request:

    [
      {
        "context": "Paul Graham is an English-born computer scientist, entrepreneur, venture capitalist, author, and essayist. He is best known for his work on Lisp, his former startup Viaweb (later renamed Yahoo! Store), co-founding the influential startup accelerator and seed capital firm Y Combinator, his blog, and Hacker News.",
        "generated_text": "Paul Graham has worked in several key areas throughout his career: IBM 1401: He began programming on the IBM 1401 during his school years, specifically in 9th grade. In addition, he has also been involved in writing essays and sharing his thoughts on technology, startups, and programming.",
        "config": {
          "hallucination": {
            "detector_name": "default"
          }
        }
      }
    ]

Response:

    [
      {
        "hallucination": {
          "context_hallucinations": [
            {
              "score": 0.8809523809523809,
              "text": "Paul Graham has worked in several key areas throughout his career: IBM 1401: He began programming on the IBM 1401 during his school years, specifically in 9th grade."
            },
            {
              "score": 0.9565217391304348,
              "text": "In addition, he has also been involved in writing essays and sharing his thoughts on technology, startups, and programming."
            }
          ],
          "high_scoring_words": [
            [[165, 168], 0.58831787109375, " In"],
            [[177, 178], 0.5969696044921875, ","],
            [[178, 181], 0.58831787109375, " he"],
            [[181, 185], 0.68060302734375, " has"],
            [[185, 190], 0.663299560546875, " also"],
            [[190, 195], 0.6777191162109375, " been"],
            [[195, 204], 0.68060302734375, " involved"],
            [[204, 207], 0.6834869384765625, " in"],
            [[207, 215], 0.6892547607421875, " writing"],
            [[215, 222], 0.692138671875, " essays"],
            [[222, 226], 0.692138671875, " and"],
            [[226, 234], 0.6690673828125, " sharing"],
            [[234, 238], 0.5738983154296875, " his"],
            [[238, 247], 0.6027374267578125, " thoughts"],
            [[247, 250], 0.6719512939453125, " on"],
            [[250, 261], 0.65753173828125, " technology"],
            [[261, 262], 0.628692626953125, ","],
            [[262, 271], 0.5912017822265625, " startups"],
            [[271, 272], 0.686370849609375, ","],
            [[272, 276], 0.7123260498046875, " and"],
            [[276, 288], 0.605621337890625, " programming"]
          ],
          "is_hallucinated": "False",
          "score": 0.4638671875,
          "sentences": [
            {
              "prediction": false,
              "score": 0.66796875,
              "text": "Paul Graham has worked in several key areas throughout his career: IBM 1401: He began programming on the IBM 1401 during his school years, specifically in 9th grade."
            },
            {
              "prediction": false,
              "score": 0.259765625,
              "text": "In addition, he has also been involved in writing essays and sharing his thoughts on technology, startups, and programming."
            }
          ]
        }
      }
    ]

The is_hallucinated field indicates whether the generated_text passed in the input is hallucinated. The top-level score field is a probability measure, in the range [0, 1], of how hallucinated the text as a whole is relative to the context: the higher the score, the more hallucinated the text. Scores are interpreted as follows:

- 0.4-0.5: a borderline case of hallucination.
- 0.5-0.7: a mild hallucination.
- Above 0.7: a strong hallucination.

In most cases, we consider a score of 0.5 or higher to be a hallucination. In the example above, the overall score of 0.46 falls in the borderline range, which is consistent with is_hallucinated being False. The sentences field breaks the result down by sentence, giving each sentence's text, prediction, and score.
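The score ranges described above can be turned into a small helper for downstream logic such as alerting or routing. This is a sketch, not part of the AIMon SDK: `hallucination_band` is a hypothetical name, the handling of the exact boundaries (0.5 counted as mild, 0.7 counted as mild) is an assumption drawn from the wording "0.5 or higher" and "above 0.7", and the "not flagged" label for scores below 0.4 is also an assumption.

```python
def hallucination_band(score: float) -> str:
    """Map a hallucination score in [0, 1] to a descriptive band."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be in [0, 1], got {score}")
    if score > 0.7:
        return "strong"
    if score >= 0.5:
        return "mild"
    if score >= 0.4:
        return "borderline"
    # Assumption: scores below 0.4 are not given a name in the text above.
    return "not flagged"


# Scores taken from the example response above.
print(hallucination_band(0.4638671875))  # overall score
print(hallucination_band(0.66796875))    # first sentence
print(hallucination_band(0.259765625))   # second sentence
```

Keeping the thresholds in one function makes it easy to adjust them if your application needs a stricter or more lenient cutoff than 0.5.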

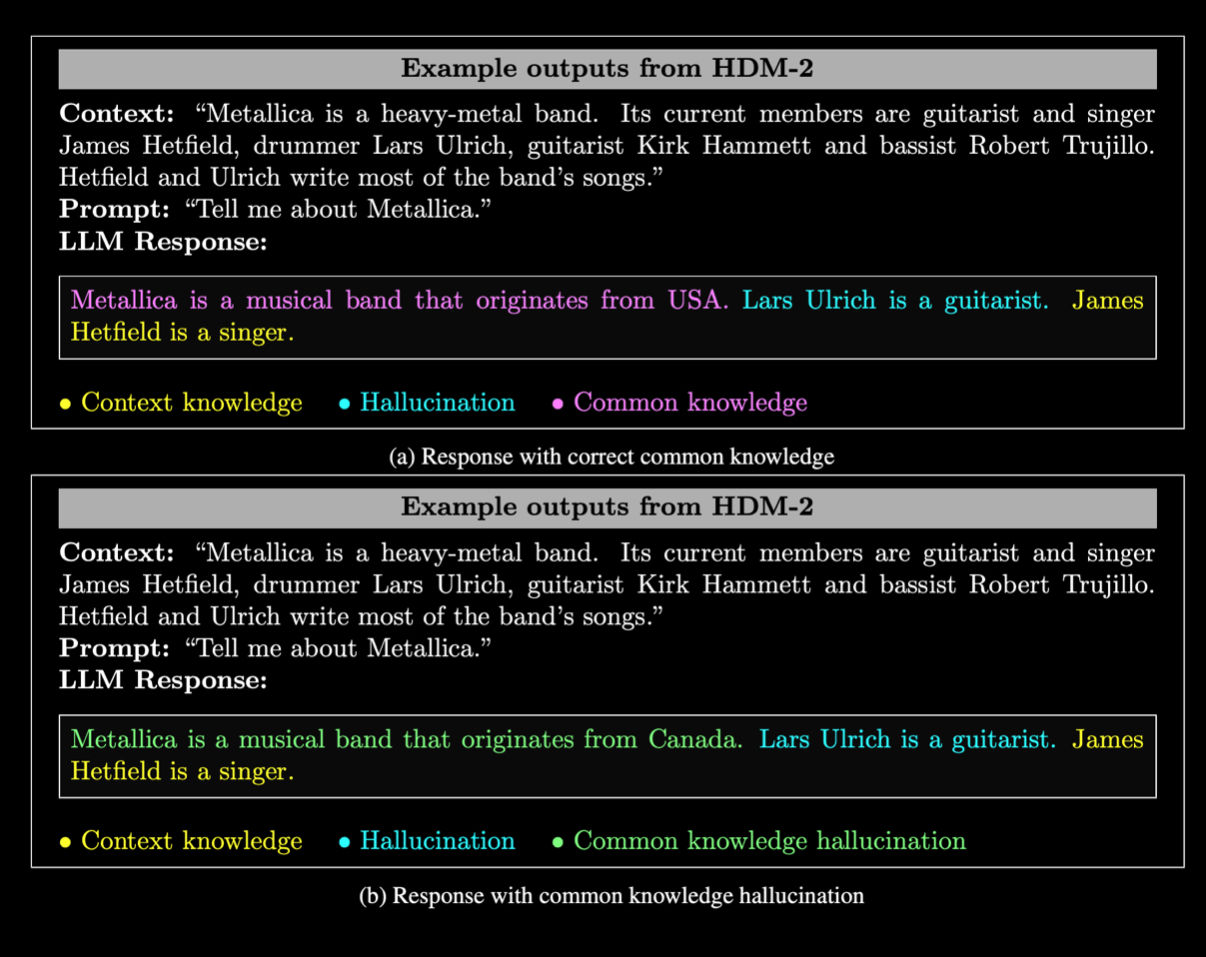

Example Annotated Hallucination
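An annotation like this can be rebuilt from the high_scoring_words field. The sketch below assumes each entry has the form [[start, end], score, token], where start and end are half-open character offsets into generated_text. That reading is inferred from the example response, not from a published schema. The helper name `annotate`, the 0.65 threshold, and the `[[...]]` markers are all hypothetical, and only three of the example's entries are included for brevity.

```python
generated_text = (
    "Paul Graham has worked in several key areas throughout his career: "
    "IBM 1401: He began programming on the IBM 1401 during his school years, "
    "specifically in 9th grade. In addition, he has also been involved in "
    "writing essays and sharing his thoughts on technology, startups, and "
    "programming."
)

# A subset of the high_scoring_words entries from the example response.
high_scoring_words = [
    [[165, 168], 0.58831787109375, " In"],
    [[215, 222], 0.692138671875, " essays"],
    [[272, 276], 0.7123260498046875, " and"],
]


def annotate(text, words, min_score=0.65):
    """Wrap every token scoring at or above min_score in [[...]] markers."""
    pieces = []
    cursor = 0
    for (start, end), score, _token in sorted(words, key=lambda w: w[0][0]):
        if score < min_score or start < cursor:
            continue  # skip low scores and any overlapping span
        pieces.append(text[cursor:start])
        pieces.append("[[" + text[start:end] + "]]")
        cursor = end
    pieces.append(text[cursor:])
    return "".join(pieces)


# Check that the offsets really point at the reported tokens.
for (start, end), _score, token in high_scoring_words:
    assert generated_text[start:end] == token

print(annotate(generated_text, high_scoring_words))
```

The same loop could emit HTML spans or terminal colors instead of bracket markers. The offset check is worth keeping either way, since it catches mismatches between the text you hold and the text the detector scored.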

Code Example

The example below demonstrates how to use HDM-2 synchronously.

Asynchronous computation is also supported: use async=True to run detection asynchronously. When you set publish=True, the results are published to the AIMon UI.

Python:

    import os

    from aimon import Detect

    # This is a synchronous example
    # Use async=True to use it asynchronously
    # Use publish=True to publish to the AIMon UI
    detect = Detect(
        values_returned=['context', 'generated_text'],
        config={"hallucination": {"detector_name": "default"}},
        publish=True,
        api_key=os.getenv("AIMON_API_KEY"),
        application_name="my_awesome_llm_app",
        model_name="my_awesome_llm_model"
    )

    @detect
    def my_llm_app(context, query):
        # Stand-in for a real LLM call
        my_llm_model = lambda context, query: f'''I am a LLM trained to answer your questions.
            But I hallucinate often.
            The query you passed is: {query}.
            The context you passed is: {context}.'''
        generated_text = my_llm_model(context, query)
        # Return the values in the order listed in values_returned
        return context, generated_text

    # The decorated function returns its own values plus the AIMon result
    context, gen_text, aimon_res = my_llm_app("This is a context", "This is a query")

    print(aimon_res)

TypeScript:

    import Client from "aimon";

    // Create the AIMon client using an API Key (retrievable from the UI in your user profile).
    const aimon = new Client({ authHeader: "Bearer API_KEY" });

    const runDetect = async () => {
      const generatedText = "your_generated_text";
      const context = ["your_context"];
      const userQuery = "your_user_query";
      const config = { hallucination: { detector_name: "default" } };

      // Analyze the quality of the generated output using AIMon
      const response = await aimon.detect(
        generatedText,
        context,
        userQuery,
        config,
      );

      console.log("Response from detect:", response);
    };

    runDetect();

Advanced Features (available in the Enterprise plan)

The HDM-2 Enterprise version provides extended capabilities beyond the default model:

- Support for enterprise knowledge grounding, enabling hallucination detection based on internal or proprietary information not found in public data.

- Access to explanation traces for auditability and debugging.

- Lower-latency variants and optimized performance for production environments.

- Extended domain coverage, including code, mathematical reasoning, and instruction adherence.

To explore enterprise features or request a license, contact info@aimon.ai.