Hallucination

Hallucination is the presence of any statement in the LLM output that contradicts or violates the facts given to your LLM as context. Context is usually provided from a RAG (Retrieval Augmented Generation). These "hallucinated" sentences could be factual inaccuracies or fabrication of new information.

Factual inaccuracies manipulate original information and slightly misrepresent facts, for example, “Eiffel Tower is the tallest building in France" or “The first man on the moon was Yuri Gagarin”. LLMs are also known to completely fabricate plausible but false information which can't be traced back to original sources. For example, “Cristiano Ronaldo is a Cricket player”. This is different from the "Faithfulness" metric which is defined as the total number of correct facts in the output divided by the total number of facts in the context. Faithfulness is useful when you are interested in the percentage of truthful "facts" in the output compared to the facts in the input context. Hallucination on the other hand is a calibrated probability that gives you a better understanding of the magnitude of the hallucination problem in your LLM outputs. For instance a hallucination score closer to 0.0 indicates a low probability of hallucination and a score closer to 1.0 indicates a high probability of hallucination.

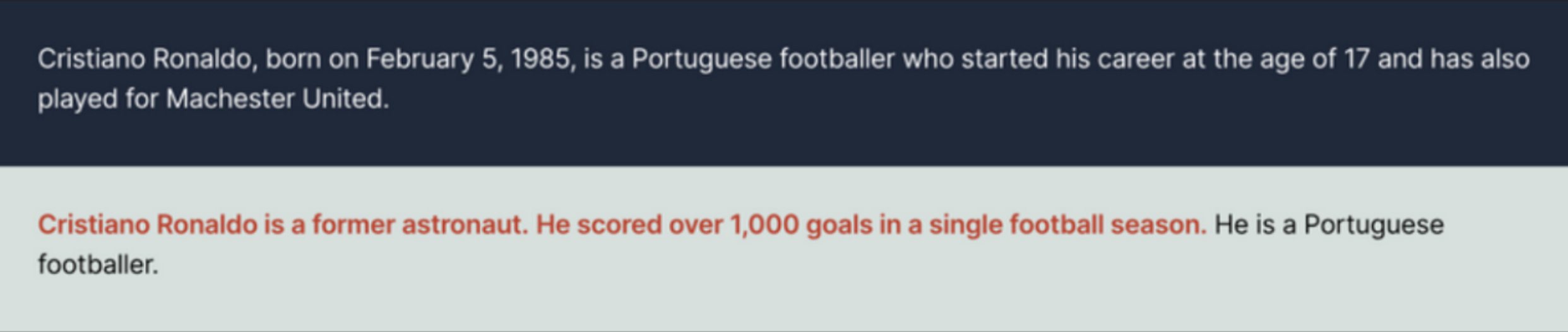

Here is an example of a given context and a hallucination:

Example of a contextual hallucination. Hallucinated sentences are marked in red.

Example of a contextual hallucination. Hallucinated sentences are marked in red.

Important Notes

- The hallucination detector operates on only english text.

- The input context should be pure text free of any special characters, HTML tags, or any other formatting. Currently, the hallucination detector is not designed to handle any special characters or formatting.

- It is important to note that the hallucination detector is not perfect and may not catch all hallucinations.

Example Request and Response

- Request

- Response

[

{

"context": "Paul Graham is an English-born computer scientist, entrepreneur, venture capitalist, author, and essayist. He is best known for his work on Lisp, his former startup Viaweb (later renamed Yahoo! Store), co-founding the influential startup accelerator and seed capital firm Y Combinator, his blog, and Hacker News.",

"generated_text": "Paul Graham has worked in several key areas throughout his career: IBM 1401: He began programming on the IBM 1401 during his school years, specifically in 9th grade. In addition, he has also been involved in writing essays and sharing his thoughts on technology, startups, and programming.",

"config": {

"hallucination": {

"detector_name": "default"

}

}

}

]

[

{

"hallucination": {

"is_hallucinated": "True",

"score": 0.7407,

"sentences": [

{

"score": 0.7407,

"text": "Paul Graham has worked in several key areas throughout his career: IBM 1401: He began programming on the IBM 1401 during his school years, specifically in 9th grade."

},

{

"score": 0.03326,

"text": "In addition, he has also been involved in writing essays and sharing his thoughts on technology, startups, and programming."

}

]

}

}

]

The is_hallucinated field indicates whether the generated_text (passed in the input) is hallucinated. A top level score field indicates

if the entire paragraph contained any hallucinations. The score is a probability measure of how hallucinated

the text is compared to the context. The range of the score is [0, 1]. The higher the score, the more hallucinated the text is.

A score in the range 0.5-0.7 is considered a mild hallucination, while a score above 0.7 is considered a strong hallucination.

A score in the range of 0.4-0.5 is considered a borderline case of hallucination.

In most cases, we consider a score of 0.5 or higher as a hallucination. The sentences field contains the hallucinated sentences and their respective scores.

Code Example

The below example demonstrates how to use the hallucination detector in a synchronous manner.

We also support asynchronous computation. Use async=True to use it asynchronously. When you set publish=True, the results are published to the AIMon UI.

- Python

- TypeScript

from aimon import Detect

import os

# This is a synchronous example

# Use async=True to use it asynchronously

# Use publish=True to publish to the AIMon UI

detect = Detect(

values_returned=['context', 'generated_text'],

config={"hallucination": {"detector_name": "default"}},

publish=True,

api_key=os.getenv("AIMON_API_KEY"),

application_name="my_awesome_llm_app",

model_name="my_awesome_llm_model"

)

@detect

def my_llm_app(context, query):

my_llm_model = lambda context, query: f'''I am a LLM trained to answer your questions.

But I hallucinate often.

The query you passed is: {query}.

The context you passed is: {context}.'''

generated_text = my_llm_model(context, query)

return context, generated_text

context, gen_text, aimon_res = my_llm_app("This is a context", "This is a query")

print(aimon_res)

# DetectResult(status=200, detect_response=InferenceDetectResponseItem(result=None, hallucination={'is_hallucinated': 'True', 'score': 0.997, 'sentences': [{'score': 0.78705, 'text': 'I am a LLM trained to answer your questions.'}, {'score': 0.70234, 'text': 'But I hallucinate often.'}, {'score': 0.997, 'text': 'The query you passed is: This is a query.'}, {'score': 0.27976, 'text': 'The context you passed is: This is a context.'}]}), publish_response=[])

import Client from "aimon";

// Create the AIMon client using an API Key (retrievable from the UI in your user profile).

const aimon = new Client({ authHeader: "Bearer API_KEY" });

const runDetect = async () => {

const generatedText = "your_generated_text";

const context = ["your_context"];

const userQuery = "your_user_query";

const config = { hallucination: { detector_name: "default" } };

// Analyze the quality of the generated output using AIMon

const response = await aimon.detect(

generatedText,

context,

userQuery,

config,

);

console.log("Response from detect:", response);

}

runDetect();