Instruction Adherence

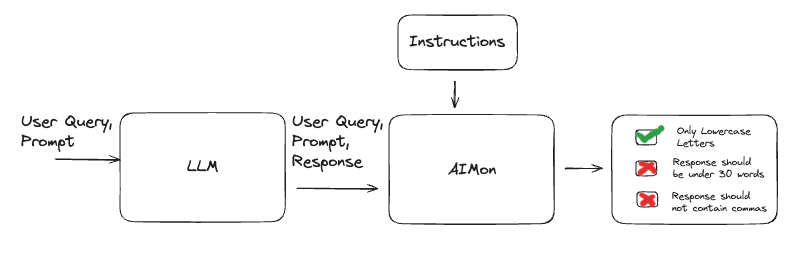

Given a set of instructions, the generated text, the input context, and the user query, this API checks whether the generated text followed every instruction specified in the `instructions` field.
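As a minimal sketch, these inputs can be packaged into a request body shaped like the example request below, using only Python's standard `json` module. The helper `build_request` is hypothetical and not part of the AIMon SDK. It only builds the payload and does not send it, because no endpoint URL is given here. The user query is left out because the example request does not include it.

```python
import json


def build_request(context: str, instructions: str, generated_text: str,
                  detector_name: str = "default") -> list:
    """Hypothetical helper: build a body shaped like the example request.

    The body is a JSON array holding one object per item to evaluate.
    """
    return [
        {
            "context": context,
            "instructions": instructions,
            "generated_text": generated_text,
            # Select the instruction adherence detector, as in the example request.
            "config": {"instruction_adherence": {"detector_name": detector_name}},
        }
    ]


payload = build_request(
    context="Paul Graham is an English-born computer scientist.",
    instructions="Write a summary of Paul Graham's career and achievements.",
    generated_text="Paul Graham began programming on the IBM 1401.",
)
print(json.dumps(payload, indent=2))
```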

Example request:

```json
[
  {
    "context": "Paul Graham is an English-born computer scientist, entrepreneur, venture capitalist, author, and essayist. He is best known for his work on Lisp, his former startup Viaweb (later renamed Yahoo! Store), co-founding the influential startup accelerator and seed capital firm Y Combinator, his blog, and Hacker News.",
    "instructions": "Write a summary of Paul Graham's career and achievements.",
    "generated_text": "Paul Graham has worked in several key areas throughout his career: IBM 1401: He began programming on the IBM 1401 during his school years, specifically in 9th grade. In addition, he has also been involved in writing essays and sharing his thoughts on technology, startups, and programming.",
    "config": {
      "instruction_adherence": {
        "detector_name": "default"
      }
    }
  }
]
```

Example response:

```json
[
  {
    "instruction_adherence": {
      "results": [
        {
          "adherence": false,
          "detailed_explanation": "The response provides a very limited overview of Paul Graham's career, only mentioning his early programming on the IBM 1401 and general involvement in writing essays. It fails to cover significant achievements such as his work on Lisp, co-founding Y Combinator, and his influence in the tech and startup world.",
          "instruction": "Write a summary of Paul Graham's career and achievements."
        }
      ],
      "score": 0.0
    }
  }
]
```
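Each entry under `results` reports one instruction, whether it was followed (`adherence`), and why, alongside an overall `score`. The following is a minimal sketch, assuming exactly the response shape shown above, that lists the instructions the generated text did not follow. The function name `failed_instructions` is hypothetical, and the explanation string is abridged.

```python
import json

# Response body abridged from the example response above.
response_json = """
[
  {
    "instruction_adherence": {
      "results": [
        {
          "adherence": false,
          "detailed_explanation": "The response provides a very limited overview of Paul Graham's career.",
          "instruction": "Write a summary of Paul Graham's career and achievements."
        }
      ],
      "score": 0.0
    }
  }
]
"""


def failed_instructions(response: list) -> list:
    """Return (instruction, explanation) pairs whose adherence is false."""
    failures = []
    for item in response:
        adherence = item.get("instruction_adherence", {})
        for result in adherence.get("results", []):
            if not result["adherence"]:
                failures.append((result["instruction"], result["detailed_explanation"]))
    return failures


response = json.loads(response_json)
score = response[0]["instruction_adherence"]["score"]
print("score:", score)
for instruction, why in failed_instructions(response):
    print(f"NOT FOLLOWED: {instruction}\n  reason: {why}")
```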

Code Example

The examples below show how to use the instruction adherence detector. The Python example runs the detector synchronously.

Python:

```python
from aimon import Detect
import os

# This is a synchronous example.
# Use async_mode=True to run it asynchronously.
# Use publish=True to publish to the AIMon UI.
detect = Detect(
    values_returned=['context', 'generated_text', 'instructions'],
    config={"instruction_adherence": {"detector_name": "default"}},
    publish=True,
    api_key=os.getenv("AIMON_API_KEY"),
    application_name="my_awesome_llm_app",
    model_name="my_awesome_llm_model"
)


@detect
def my_llm_app(context, query):
    # Stand-in for a real LLM call: it echoes the query and context back.
    my_llm_model = lambda context, query: (
        "I am an LLM trained to answer your questions.\n"
        "But I often do not follow instructions.\n"
        f"The query you passed is: {query}.\n"
        f"The context you passed is: {context}."
    )
    generated_text = my_llm_model(context, query)
    # Instructions the generated text is checked against.
    instructions = '1. Ensure answers are in english 2. Ensure there are no @ symbols'
    # Return values must follow the order given in values_returned.
    return context, generated_text, instructions


# The decorator appends the AIMon result to the function's return values.
context, gen_text, ins, aimon_res = my_llm_app("This is a context", "This is a query")
print(aimon_res)
# DetectResult(status=200, detect_response=InferenceDetectResponseItem(result=None, instruction_adherence={'results': [{'adherence': True, 'detailed_explanation': 'The response is entirely in English and successfully communicates the generated content.', 'instruction': 'Ensure answers are in english'}, {'adherence': True, 'detailed_explanation': "The response does not contain any '@' symbols.", 'instruction': 'Ensure there are no @ symbols'}], 'score': 1.0}), publish_response=[])
```

TypeScript:

```typescript
import Client from "aimon";

// Create the AIMon client using an API key (retrievable from the UI in your user profile).
const aimon = new Client({ authHeader: "Bearer API_KEY" });

const runDetect = async () => {
  const generatedText = "your_generated_text";
  const context = ["your_context"];
  const userQuery = "your_user_query";
  const config = { instruction_adherence: { detector_name: "default" } };

  // Analyze the quality of the generated output using AIMon.
  const response = await aimon.detect(
    generatedText,
    context,
    userQuery,
    config,
  );

  console.log("Response from detect:", response);
};

runDetect();
```