Context Relevance

Context relevance is a critical aspect of Language Model (LM) evaluation, especially in the context of Large Language Models (LLMs) like GPT-4o. The relevance of context data plays a crucial role in determining the accuracy and quality of the responses generated by LLMs. However, evaluating the relevance of context data can be complicated and often subjective. In order to address these challenges, AIMon has developed purpose-built tools that enable users to assess and improve the relevance of context data in LLM-based applications. To address the challenge of subjectiveness in evaluating context relevance, AIMon has developed a customizable context relevance evaluator and a re-ranker model that provide a more efficient and accurate solution for evaluating context relevance.

Challenges with Traditional LLM Evaluation Methods

Traditional methods of evaluating context relevance in LLM-based applications have several limitations, including:

- Variance and inconsistency in results.

- Subjectiveness of evaluations.

- Cost inefficiency of using large off-the-shelf LLMs.

Read more about pros and cons of LLM Judges here.

AIMon's Approach to Context Relevance

AIMon's purpose-built and customizable relevance graders are designed to address the limitations of traditional evaluation methods. These tools enable users to assess the relevance of context data more accurately and efficiently, leading to improved performance and reliability of LLM-based applications. By using AIMon's relevance graders, users can customize the evaluation criteria to suit their specific needs and requirements, resulting in more precise and consistent evaluations.

The customization made during the evaluation can be immediately applied to the improve the re-ranking phase of the LLM application to bubble up the most relevant documents to the top.

See more about the re-ranker model here.

Task Definition

The task definition allows users to specify the domain or objective of the re-ranker, ensuring that context is evaluated based on the intended use case. By providing a structured task definition, users can improve context relevance assessments, enabling more precise ranking of retrieved documents for applications such as summarization, question answering, and retrieval-augmented generation (RAG).

Example

Pre-requisites

Before running, ensure that you have an AIMon API key. Refer to the Quickstart guide for more information.

Code Examples

Like any other evaluation, the context relevance evaluation can be done using the AIMon SDK. Here is an example of how to evaluate the relevance of context data using the AIMon SDK:

API Request & Response Example

- Request

- Response

[

{

"context": ["The capital of France is Paris.", "France is a country in Europe."],

"user_query": "What is the capital city of France?",

"task_definition": "Answer the user's question based on the provided context.",

"config": {

"retrieval_relevance": {

"detector_name": "default"

}

}

}

]

[

{

"retrieval_relevance": [

{

"explanations": [

"Document 1 directly answers the query by stating that the capital of France is Paris, which is the specific information being sought. The high relevance score indicates a strong match due to the precise alignment with the query's focus on the capital city. There are no aspects of this document that do not match the query, as it provides the exact answer required.",

"2. Document 2 mentions that France is a country in Europe, which is related to the broader context of France but does not address the specific question about its capital city. The relevance score is lower because it lacks the crucial information that the query seeks, which is specifically about the capital rather than general information about France. Therefore, while it provides some context, it fails to answer the query directly."

],

"query": "What is the capital city of France?",

"relevance_scores": [

55.23201018550026,

35.53204461040309

]

}

]

}

]

Code Examples

- Python (Sync)

- Python (Async)

- Python (Decorator)

- TypeScript

# Synchronous example

import os

from aimon import Client

import json

# Initialize client

client = Client(auth_header=f"Bearer {os.environ['AIMON_API_KEY']}")

# Construct payload

payload = [{

"generated_text": "The product ships within 2 business days.",

"user_query": "How long will it take for my order to arrive?",

"context": ["Our shipping policy states delivery in 5–7 business days."],

"task_definition": "Answer the user's question based on logistics data.",

"config": {

"retrieval_relevance": {

"detector_name": "default"

}

},

"publish": False

}]

# Call sync detect

response = client.inference.detect(body=payload)

# Print result

print(json.dumps(response[0].retrieval_relevance, indent=2))

# Aynchronous example

import os

import json

from aimon import AsyncClient

aimon_api_key = os.environ["AIMON_API_KEY"]

aimon_payload = {

"generated_text": "Marseille is a beautiful port city in southern France.",

"context": ["Marseille is a coastal city located in the Provence-Alpes-Côte d'Azur region."],

"user_query": "Tell me about Marseille.",

"task_definition": "Grade how relevant the context is to the user query.",

"config": {

"retrieval_relevance": {

"detector_name": "default",

}

},

"publish": True,

"async_mode": True,

"application_name": "async_metric_example",

"model_name": "async_metric_example"

}

data_to_send = [aimon_payload]

async def call_aimon():

async with AsyncClient(auth_header=f"Bearer {aimon_api_key}") as aimon:

return await aimon.inference.detect(body=data_to_send)

# Await and confirm

resp = await call_aimon()

print(json.dumps(resp, indent=2))

print("View results at: https://www.app.aimon.ai/llmapps?source=sidebar&stage=production")

import os

from aimon import Detect

detect = Detect(

values_returned=["context", "user_query", "task_definition"],

config={

"retrieval_relevance": {

"detector_name": "default"

}

},

api_key=os.getenv("AIMON_API_KEY"),

application_name="application_name",

model_name="model_name"

)

@detect

def retrieval_relevance_test(context, user_query, task_definition):

return context, user_query, task_definition

context, user_query, task_definition, aimon_result = retrieval_relevance_test(

["The capital of France is Paris.", "France is a country in Europe."],

"What is the capital city of France?",

"Answer the user's question based on the provided context."

)

print(aimon_result)

import Client from "aimon";

import dotenv from "dotenv";

dotenv.config();

const aimon = new Client({

authHeader: `Bearer ${process.env.AIMON_API_KEY}`,

});

const run = async () => {

const response = await aimon.detect({

context: [

"The capital of France is Paris.",

"France is a country in Europe.",

],

userQuery: "What is the capital city of France?",

taskDefinition: "Answer the user's question based on the provided context.",

config: {

retrieval_relevance: {

detector_name: "default",

},

},

});

console.log("AIMon response:", JSON.stringify(response, null, 2));

};

run();

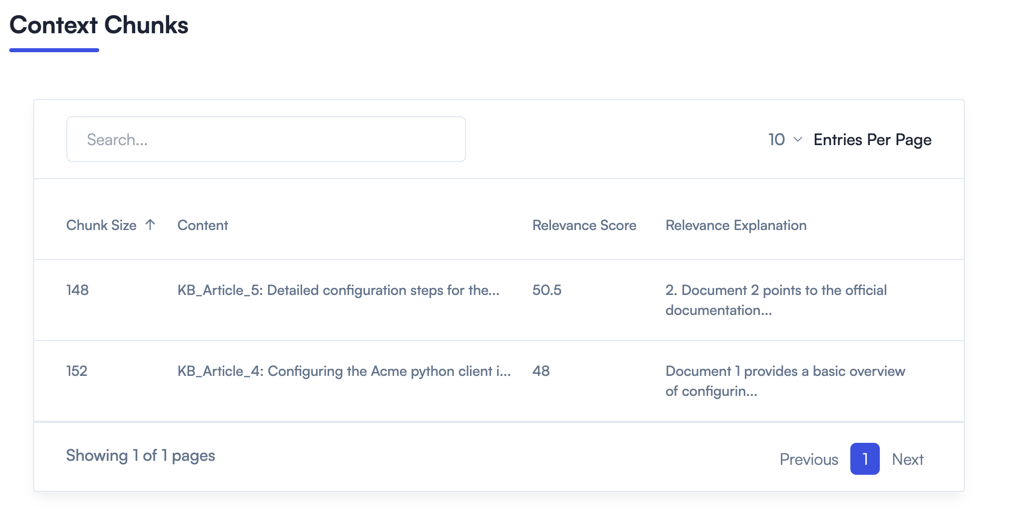

Here is an example on how the metrics look on the AIMon UI: